Integrating Multi-Modal Imaging and Computational Techniques for Enhanced Tumor Characterization in Oncology

Authors :

Faizan Sheikh and Sameer Patil

Address :

Department of Data Science, Aligarh Muslim University, India

Department of Machine Learning, Jamia Millia Islamia, India

Abstract :

Brain tumor characterization is vital for accurate diagnosis, treatment planning, and prognosis in oncology. Traditional imaging techniques such as CT (Computed Tomography), MRI (Magnetic Resonance Imaging), and PET (Positron Emission Tomography) provide valuable information, but each modality is limited in spatial resolution, sensitivity, or functional insight. These limitations reduce the precision of personalized treatment strategies and make early detection and the assessment of treatment response challenging. This study proposes a novel framework, MMIETC, that integrates multi-modal imaging (MMI) data from MRI, CT, and PET using machine learning methods: convolutional neural networks (CNNs) for automated feature extraction and tumor segmentation, and random forests (RF) for Enhanced Tumor Characterization (ETC). Image processing algorithms such as wavelet transforms are also employed for enhanced segmentation and feature fusion. The study focuses on designing a unified computational framework that can accurately extract anatomical, functional, and molecular features from the imaging data, improving diagnostic precision. Integrating multi-modal imaging with CNNs for deep learning-based segmentation and random forests for predictive analysis is expected to improve tumor characterization. The proposed approach should enhance tumor margin delineation, detect intra-tumor heterogeneity, and identify biomarkers for more accurate diagnostic and prognostic evaluation. These advancements are expected to support better treatment planning, more personalized therapies, and improved patient outcomes in oncology.

Keywords :

Multi-modal imaging, machine learning techniques, convolutional neural networks, random forest, feature extraction, biomarker identification, feature fusion.

1. Introduction

With approximately 400,000 cases recorded in the US between 2012 and 2016, primary tumors of the brain and spinal cord rank among the cancers with the highest incidence across patients of all ages [1]. Brain tumors can develop either from native brain tissue or from metastasis, a process by which malignant cells from other organs travel to the brain, where they accumulate abnormally. Diagnosing brain tumors requires a thorough approach, which often includes biopsies and imaging studies, to determine the tumor's grade and features [2]. The development of more accurate neuroimaging methods has led to a dramatic improvement in the noninvasive characterization of brain tumors over the last five years. Tumor-type discrimination has been achieved using a variety of MRI-based methods, such as dynamic susceptibility contrast, perfusion imaging, magnetic resonance fingerprinting, apparent diffusion coefficient (ADC) values, mass spectroscopy, and others [3]. Both the initial diagnosis and the monitoring of brain tumors rely heavily on cross-sectional imaging techniques. The usual clinical workflow includes CT and MRI for tumor detection, mapping, extent determination, and posttreatment monitoring [4]. In medical imaging, segmentation partitions an image into separate regions. Splitting the image in this way yields a representation that is more suitable for analysis, because each part of the picture can be examined on its own [5]. Due to their late onset and lack of symptoms, gliomas are difficult to identify in their early stages. For most patients, the key to a better prognosis is the precise removal of these malignant tumors [6].

The detection of tumors in human organs relies heavily on segmentation, and conventional approaches leave room for improvement in many cases. Deep learning techniques enable automatically optimized feature extraction, which allows them to surpass conventional machine learning-based approaches and to perform well on complicated problems [7]. CNNs have achieved exceptional results in fields as diverse as medical image analysis, face recognition, object identification, and image classification, reshaping the image recognition landscape [8]. A CNN can extract features from images automatically and can achieve good recognition accuracy with relatively little training data [9]. Improvements in medical image classification technology have revealed new disease features and underlying mechanisms and have led to more efficient and accurate disease detection. Consequently, these technological advancements have greatly improved therapy methods and patient survival rates [10]. Imaging biomarkers hold considerable promise for advancing precision diagnostics, and their development is closely tied to quantitative image acquisition and analysis [11]. Drawing on many different fields, such as communications, mathematics, computer science, signal processing, quantum physics, and image processing, the wavelet transform emerged as one of the most intriguing developments of the past decade [12].

This work proposes a new computational framework, MMIETC, which combines multi-modality imaging with CNNs for deep learning-based feature extraction and segmentation and with Random Forests for predictive biomarker identification, in order to overcome the limitations of conventional imaging and make full use of the potential of MMI. The framework aims at a holistic understanding of brain tumor characteristics by fusing anatomical, molecular, and functional information from the MRI, CT, and PET modalities. The resulting insights are expected to improve tumor margin delineation, the detection of intra-tumor heterogeneity, and the identification of predictive biomarkers for diagnostic and prognostic assessment.

The key significance of this study is

The remainder of this work is structured as follows. Section 2 reviews related work on detecting and characterising brain tumours. Section 3 details the proposed scheme. Section 4 presents the dataset, the analysis of the proposed approach, and the experimental findings. Section 5 concludes the work.

2. Related work

Lee et al. [13] suggested combining sophisticated three-dimensional tumor models with artificial intelligence (AI) to improve tumor microenvironment replication and enable individualized therapy. Combining AI with cancer-on-chip models and microfluidic devices enables high-throughput, real-time tracking of carcinogenesis and biophysical tumour features. According to the results, AI outperforms conventional two-dimensional techniques in improving the accuracy of tumor model creation. Nonetheless, a significant drawback is the difficulty of precisely simulating tumor heterogeneity and microenvironment dynamics, which remains a problem in applications of customized treatment.

By applying sophisticated MRI techniques for preoperative glioma assessment, the GliMR COST action seeks to overcome the limitations of conventional MRI in detecting diffuse gliomas and in tumor genotyping (Hangel et al. [14]). The techniques investigated include chemical exchange saturation transfer (CEST), magnetic resonance spectroscopy (MRS), and MR-based radiomics. These techniques improve tumor characterisation, although clinical confirmation is still lacking. Despite their potential, the difficulties in standardizing and interpreting these sophisticated MRI techniques make it hard to integrate them into standard clinical practice.

Foti et al. [15] demonstrated that cancer screening with dual-energy computed tomography (DECT) offers promising results by allowing detailed tissue characterisation using two separate X-ray energy bands. Several methods have been developed to enhance lesion identification and material composition analysis and to reduce iodine dose and artefacts. These methods include iodine density maps, virtual non-contrast (VNC) images, and virtual monoenergetic images (VMIs). DECT is useful for tumor diagnosis, staging, and post-treatment assessment. Although very promising, it has some drawbacks, such as the requirement for additional clinical validation and possible variability in image interpretation.

Hirschler et al. [16] aimed to increase knowledge of sophisticated MRI methods for preoperative glioma evaluation beyond traditional MRI, which cannot identify tumor genotype and has trouble defining diffuse gliomas. Diffusion-weighted MRI, magnetic resonance fingerprinting, and dynamic susceptibility contrast are some of the methods covered. Although tumor characterisation is improved by these techniques, there is still a lack of clinical confirmation. Because of problems with standards and practical implementation, integrating these sophisticated MRI techniques into standard clinical practice is the primary obstacle.

The application of AI to PET imaging with tracers other than [18F] F-FDG is examined by Eisazadeh et al. [17], with particular attention paid to radiomics features obtained from tracers such as [18F] F-FET, [18F] F-FLT, and [11C] C-MET. In patients with gliomas, these tracers help to discover lesions, characterize tumors, and predict survival. There is also potential for using AI-based PET-volumetry to direct adaptive radiation treatment. However, despite the improved diagnostic performance provided by PET-derived radiomics, the need for additional validation and standardization across various tracers and tumor types limits its practical applicability.

Jadoon et al. [18] suggested a heterogeneous deep learning ensemble model to predict breast cancer prognosis using multi-modal data, including copy number variation (CNV), clinical, and gene expression data. The model consists of three stages: feature extraction, feature stacking, and prediction using a random forest. Feature extraction uses convolutional neural networks (CNNs) for clinical and gene expression data and deep neural networks (DNNs) for CNV data. The results demonstrate higher accuracy compared to homogeneous and single-modal models. Nevertheless, drawbacks include difficulties in combining and making the most of the many data types across modalities.

To circumvent the challenges of merging pathological images and genetic data, Waqas et al. [19] proposed an attention-based multi-modal network for accurate prediction of breast cancer prognosis. The model captures interactions both within and between modalities using intra-modality self-attention and inter-modality cross-attention modules, all without generating high-dimensional features. An adaptive fusion block improves the integration of these relationships. Compared with current approaches, the results demonstrate improved prediction performance. However, the complexity of modelling both kinds of relationship while keeping computation practical still hampers broad applicability.

Khalighi et al. [20] explored how artificial intelligence (AI) revolutionises neuro-oncology, specifically gliomas, emphasising how AI can improve diagnosis, prognosis, and treatment. AI models that use imaging, histopathology, and genetic data perform better than human assessments regarding accuracy and molecular detection, which could lead to a decrease in invasive diagnostic procedures. Methods vary from deep learning to standard machine learning, with difficulty integrating data and dealing with biases. Although AI holds promise for enhancing individualised treatment plans, ethical considerations regarding openness and fairness remain obstacles.

3. Proposed Scheme

The proposed MMIETC framework uses multimodal imaging, namely MRI, CT, and PET data, and advanced machine learning techniques for brain tumor characterization. The steps involve image acquisition, followed by registration to align the data and pre-processing that includes normalization. Automatic feature extraction uses CNNs, while wavelet transforms enhance the spatial-frequency features. The features from these modalities are combined to provide complementary information on anatomy, function, and molecular composition that could enhance tumor margin delineation and intra-tumor heterogeneity detection. Biomarker identification and predictive analysis are done using Random Forest models, which rank features by their relevance to prognosis and treatment response. This integrated framework is intended to support accurate diagnosis, personalized treatment planning, and precise monitoring of therapy outcomes. Segmentation quality is quantified with metrics such as the Dice coefficient and Jaccard index to ensure robustness. The MMIETC framework integrates deep learning-based segmentation with RF for predictive modeling to improve diagnostic precision, optimize therapies, and, ultimately, improve patient outcomes in oncology. Figure 1 shows the Methodological Flow of the Proposed MMIETC Approach.

Image registration aligns multiple imaging modalities (MRI, CT, PET) into the same spatial coordinate system for proper comparison and fusion.

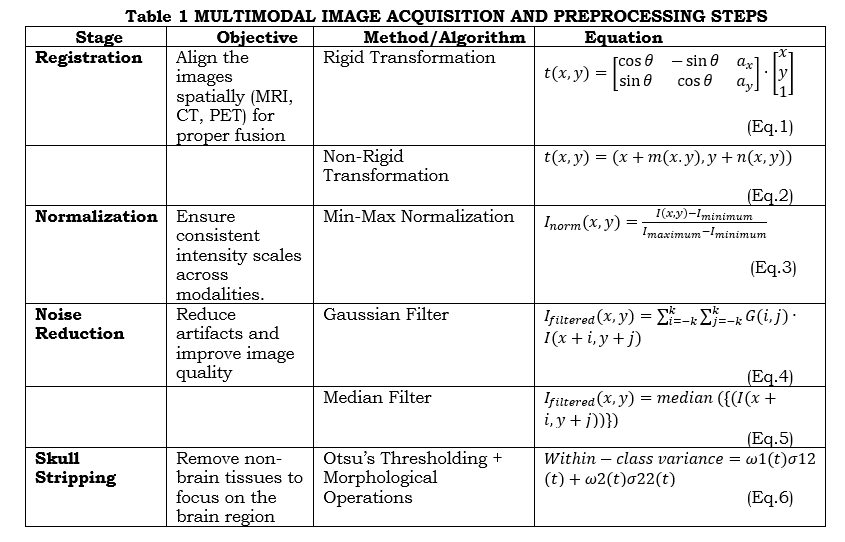

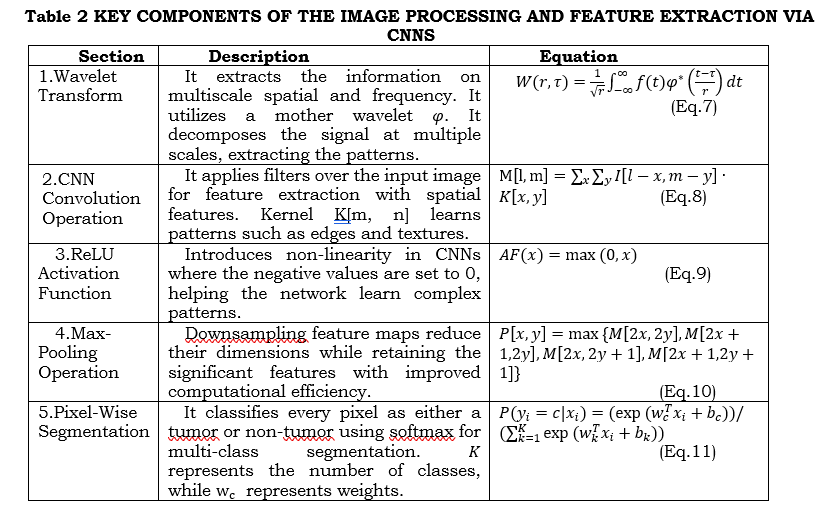

Preprocessing and Multi-Modal Image Acquisition: MRI, CT, and PET brain images are registered and their quality is enhanced ahead of further processing. In image registration, rigid and non-rigid transformations align the modalities in space, as given by equations (1) and (2). Normalization standardizes the intensity range across images using min-max scaling, as in equation (3). Noise reduction suppresses artifacts with a Gaussian filter, equation (4), or a median filter, equation (5), while retaining important details. Skull stripping removes non-brain tissue by applying Otsu thresholding, equation (6), followed by morphological operations that isolate the brain region of interest. These pre-processing steps ensure that the data are aligned, consistent, and denoised before segmentation, feature extraction, and tumor analysis. Table 1 shows the multimodal image acquisition and preprocessing steps.

where t(x,y) denotes the transformed coordinates, θ is the rotation angle, and a_x, a_y are the translations along the x- and y-axes. m(x,y) and n(x,y) are the spatial deformation fields along the x and y directions. I_norm(x,y) is the normalized pixel value at (x,y), and I_minimum, I_maximum are the minimum and maximum pixel intensities in the image. G(i,j) is the Gaussian kernel. ω_1(t), ω_2(t) are the probabilities of the two classes (brain and non-brain), and σ_1^2(t), σ_2^2(t) are the variances of the two classes.
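The expressions for equations (1) to (6) are not reproduced in the text. As a reading aid, the block below writes out the standard textbook forms that match the symbols defined above. These are assumptions rather than the authors' exact expressions; in particular, the Gaussian standard deviation σ, the filter window W, the filtered image I_med, and the within-class variance σ_w^2(t) are names introduced here for illustration. The block assumes the amsmath package.

```latex
\begin{align}
t(x,y) &= \begin{bmatrix}\cos\theta & -\sin\theta\\ \sin\theta & \cos\theta\end{bmatrix}
          \begin{bmatrix}x\\ y\end{bmatrix} + \begin{bmatrix}a_x\\ a_y\end{bmatrix} \tag{1}\\
t(x,y) &= \bigl(x + m(x,y),\; y + n(x,y)\bigr) \tag{2}\\
I_{norm}(x,y) &= \frac{I(x,y) - I_{minimum}}{I_{maximum} - I_{minimum}} \tag{3}\\
G(i,j) &= \frac{1}{2\pi\sigma^{2}}\exp\!\left(-\frac{i^{2}+j^{2}}{2\sigma^{2}}\right) \tag{4}\\
I_{med}(x,y) &= \operatorname{median}\bigl\{\, I(x+i,\,y+j) : (i,j)\in W \,\bigr\} \tag{5}\\
\sigma_w^{2}(t) &= \omega_1(t)\,\sigma_1^{2}(t) + \omega_2(t)\,\sigma_2^{2}(t) \tag{6}
\end{align}
```

In this reading, Otsu's method selects the threshold t that minimizes the within-class variance in (6), which separates brain from non-brain intensities.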

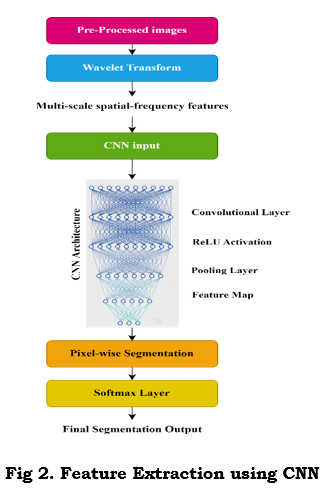
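The preprocessing steps described above (min-max normalization, median filtering, and Otsu thresholding) can be sketched in a few lines of NumPy. This is a minimal illustration on a synthetic 2D image, not the authors' pipeline: registration and the morphological clean-up are omitted, and the function names and the 3x3 window size are assumptions made for the example.

```python
import numpy as np

def min_max_normalize(img):
    """Rescale intensities to [0, 1] using min-max scaling."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:  # constant image: avoid division by zero
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)

def median_filter3(img):
    """3x3 median filter with edge replication (window size is an assumption)."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    # Stack the nine shifted views and take the per-pixel median.
    windows = [padded[i:i + h, j:j + w] for i in range(3) for j in range(3)]
    return np.median(np.stack(windows, axis=0), axis=0)

def otsu_threshold(img, bins=256):
    """Otsu threshold: maximizes between-class variance, which is
    equivalent to minimizing the within-class variance."""
    hist, edges = np.histogram(img, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    p = hist / hist.sum()
    w1 = np.cumsum(p)                # probability of the class below the threshold
    w2 = 1.0 - w1                    # probability of the class above it
    mu_cum = np.cumsum(p * centers)  # cumulative mean
    mu_total = mu_cum[-1]
    valid = (w1 > 0) & (w2 > 0)
    between = np.zeros_like(w1)
    between[valid] = (mu_total * w1[valid] - mu_cum[valid]) ** 2 / (w1[valid] * w2[valid])
    return centers[np.argmax(between)]

# Synthetic example: a bright block on a dark background plus one noise pixel.
img = np.full((10, 10), 50.0)
img[3:7, 3:7] = 200.0
img[8, 8] = 255.0
norm = min_max_normalize(img)
den = median_filter3(norm)
mask = den > otsu_threshold(den)  # foreground mask, a first step towards skull stripping
```

The median filter removes the isolated noise pixel but also rounds the corners of the block, which is the usual trade-off for this kind of filter.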

Image Processing and Feature Extraction via CNNs: Wavelet transforms and CNNs complement each other in multi-modal tumor image analysis. The wavelet transform captures spatial-frequency features at multiple scales, which helps identify both fine and coarse structures. The continuous wavelet transform decomposes the input signal with a scaled and translated mother wavelet, as shown in equation (7); its scale and translation parameters localize patterns in frequency and space. In parallel, CNNs convolve the input image with learned filters to extract higher-level spatial features, as expressed in equation (8). Each convolution is followed by a ReLU activation, equation (9), to introduce non-linearity. Pooling layers then down-sample the feature maps while retaining essential details, as shown in equation (10). CNNs handle multi-modal data such as MRI, CT, and PET either as multi-channel inputs or as parallel streams. In the final pixel-wise segmentation layer, the softmax function classifies each pixel, which supports precise tumor detection. This hybrid approach combines robust spatial-frequency features with learned spatial patterns, improving segmentation accuracy for complex medical images. Figure 2 shows this feature extraction using CNN.

where f(t) is the input signal (image intensity in 1D), φ^* is the mother wavelet, r is the scale parameter (frequency), and τ is the translation parameter (spatial shift). M[l,m] is the feature map value at position (l,m), I[l,m] is the input image pixel value at (l,m), and K[x,y] is the convolution kernel of size (x,y). P(y_i=c|x_i) is the probability of pixel i belonging to class c.
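The CNN building blocks named above (convolution, ReLU, pooling, and pixel-wise softmax) can be illustrated with a small NumPy sketch. It uses the common forms M[l,m] = Σ_x Σ_y I[l+x, m+y] K[x,y] for the convolution and P(y_i=c|x_i) = exp(z_ic) / Σ_k exp(z_ik) for the softmax, which are assumed here because the original equations are not reproduced. The fixed edge-detecting kernel and the toy logits z are hypothetical; a real CNN learns its kernels from data.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """M[l, m] = sum over (x, y) of I[l + x, m + y] * K[x, y], no padding, stride 1.
    As in most deep learning libraries, the kernel is not flipped."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for l in range(out_h):
        for m in range(out_w):
            out[l, m] = np.sum(image[l:l + kh, m:m + kw] * kernel)
    return out

def relu(x):
    """ReLU activation: max(0, x) element-wise."""
    return np.maximum(0, x)

def max_pool2x2(x):
    """2x2 max pooling with stride 2 (odd trailing rows/columns are dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def softmax(logits, axis=-1):
    """Numerically stable softmax over the class axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

# Toy image with a vertical edge; the kernel responds to left-to-right increases.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
kernel = np.array([[-1.0, 1.0]])

feature = relu(conv2d_valid(image, kernel))  # shape (6, 5), response at the edge
pooled = max_pool2x2(feature)                # shape (3, 2)

# Hypothetical two-class logits per pixel (background, edge) built from the feature map.
logits = np.stack([1.0 - pooled, pooled], axis=-1) * 4.0
probs = softmax(logits)                      # per-pixel class probabilities
labels = probs.argmax(axis=-1)               # pixel-wise class decision
```

Each pixel's probabilities sum to one, and the arg-max over the class axis gives the segmentation label for that pixel.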

Tumour Segmentation: U-Net is widely applied for brain tumour segmentation because its encoder-decoder structure is well suited to capturing fine-grained details of tumours. The successive convolution and pooling layers in the encoder capture the image's spatial features, tracing patterns such as tumour edges, necrosis, and oedema. Upsampling in the decoder restores the feature maps to reconstruct details in a segmentation map. Skip connections between the encoder and decoder support accurate localization, since they preserve the spatial information required for accurate boundary detection of tumour sub-regions. The architecture is therefore effective for multi-class segmentation, labelling active tumour, necrotic tissue, and oedema even when annotated brain imaging data are scarce. The layer-wise computation is given by equation (12).

where F_l is the Feature map at layer l, C_l refers to the Convolution filter for layer l, b_l is the Bias term, AF is the ReLU activation function AF(x)=max(0,x), and * is the Convolution operation.
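Equation (12) itself is not reproduced in the text. A standard convolutional layer update that is consistent with the symbols defined above would read as follows; this is an assumed form, and F_{l-1} (the feature map of the previous layer, with F_0 taken as the input image) is notation introduced here.

```latex
\begin{equation}
F_l = AF\left(C_l * F_{l-1} + b_l\right), \qquad AF(x) = \max(0, x) \tag{12}
\end{equation}
```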

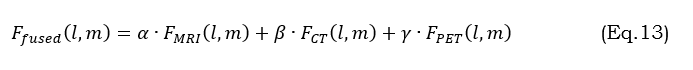

Multi-modal feature fusion combines MRI, CT, and PET data to exploit the complementary information these images provide for accurate tumor segmentation, as expressed in equation (13).

where α, β, γ are weight parameters controlling each modality's contribution. The merged input provides better segmentation and captures diverse tumor characteristics such as structure, activity, and edema.
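One simple reading of this weighted fusion is a voxel-wise linear combination, α·MRI + β·CT + γ·PET, applied to registered and normalized images. The sketch below assumes that form; the function name and the default weights are hypothetical, and the text does not state how α, β, γ are chosen.

```python
import numpy as np

def fuse_modalities(mri, ct, pet, alpha=0.5, beta=0.3, gamma=0.2):
    """Voxel-wise weighted fusion: alpha * MRI + beta * CT + gamma * PET.
    The default weights are illustrative assumptions, not values from the paper."""
    mri, ct, pet = (np.asarray(a, dtype=np.float64) for a in (mri, ct, pet))
    if not (mri.shape == ct.shape == pet.shape):
        raise ValueError("modalities must be registered to the same grid")
    return alpha * mri + beta * ct + gamma * pet

# Toy inputs standing in for registered, normalized slices.
mri = np.ones((4, 4))
ct = np.zeros((4, 4))
pet = np.full((4, 4), 2.0)
fused = fuse_modalities(mri, ct, pet)
```

An alternative that avoids fixing the weights by hand is to stack the modalities as input channels and let the network learn their relative contribution, which matches the multi-channel option mentioned for CNN inputs.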

Detection of intra-tumour heterogeneity amounts to producing a segmentation map in which each pixel is labelled as active tumour (areas with high metabolic activity on PET), necrotic tissue (dead tissue identified on MRI or CT), or oedema (swelling observed on MRI). This pixel-level segmentation enables a more detailed analysis of tumor composition, which is highly relevant to personalized treatment strategies such as surgery or chemotherapy. U-Net suits this task because its skip connections preserve both low-level and high-level features, allowing accurate boundary detection. It supports multi-class segmentation, labelling the various tumor regions and thereby capturing heterogeneity. U-Net also learns efficiently from a limited set of annotated medical images, which makes it well suited to medical applications, and it scales to multi-modal inputs such as MRI, CT, and PET. Fusing features from the different imaging sources improves detection accuracy, and the combination supports a more reliable diagnosis and better treatment planning.

Biomarker identification with Random Forests relies on features extracted from the CNN-segmented multimodal images (MRI, CT, and PET) to relate tumor features to clinical outcomes such as prognosis or treatment response. RF is an ensemble learning technique that trains several decision trees on input features, including tumor size, shape, intensity, and texture, to classify tumor types, such as glioblastoma and meningioma, or to predict outcomes. It produces robust classifications by aggregating the predictions of all trees through majority voting. A salient strength of RF is its ability to rank feature importance, using Gini importance or, as a more sensitive alternative, permutation importance, which indicates which candidate biomarkers are most informative about tumor behavior. Biomarkers serve to predict the growth pattern of tumors, whether they are benign or malignant, and their sensitivity to treatment. RF handles high-dimensional, noisy data with non-linear interactions well, which makes it a powerful tool for biomarker discovery. The identified biomarkers help plan treatment on an individual basis and thus complement the precision medicine approach to managing brain tumors.
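The feature-ranking step can be sketched with scikit-learn, assuming that library is available. The data below are synthetic and the feature names are hypothetical placeholders for tumor descriptors; the label is constructed to depend only on the first feature so that the ranking has a known answer. This illustrates Gini and permutation importance in general, not the paper's trained model.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance

rng = np.random.default_rng(0)

# Hypothetical per-tumor descriptors (synthetic values, for illustration only).
feature_names = ["volume", "sphericity", "mean_intensity", "texture_entropy"]
X = rng.normal(size=(300, 4))
y = (X[:, 0] > 0).astype(int)  # synthetic label driven only by the first feature

rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(X, y)

# Gini (impurity-based) importance, normalized to sum to 1.
gini_rank = np.argsort(rf.feature_importances_)[::-1]

# Permutation importance: drop in score when one feature is shuffled.
# Computed on the training data here for brevity; a held-out set is preferable.
perm = permutation_importance(rf, X, y, n_repeats=5, random_state=0)
perm_rank = np.argsort(perm.importances_mean)[::-1]

top_gini = feature_names[gini_rank[0]]
top_perm = feature_names[perm_rank[0]]
```

Both rankings should place the informative feature first on this toy problem; on real data the two measures can disagree, which is one reason to report both.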

Treatment planning and decision support in the MMIETC workflow integrate biomarkers, tumor characteristics, and predictive outcomes into medical decisions for personalized treatment. This helps ensure that treatment strategies are tailored to tumor type, molecular profile, and prognosis in order to improve patient outcomes.

Personalized Therapy Recommendations: Biomarkers from segmentation and RF analysis in turn indicate appropriate treatment modalities. For example, if biomarkers indicate a high level of EGFR mutation in glioblastoma, the workflow should recommend targeted therapies such as tyrosine kinase inhibitors (TKIs). Radiation therapy may be suggested for tumors with radio-sensitive profiles, while chemotherapy may be advisable for tumors with high proliferative potential. This both optimizes treatment effectiveness and minimizes unnecessary intervention.

Monitoring and Response Evaluation: Follow-up scans are incorporated into the same pipeline to estimate changes in tumor characteristics after treatment. Longitudinal imaging data, such as MRI and PET images, provide input to the model to assess changes in tumor size, shape, and heterogeneity. RF models compare biomarkers pre- and post-treatment and emphasize responses and resistance patterns in treatment outcomes. In the case of resistance or failure of treatments, the system will recommend other alternative therapies or adjustments in the current one, such as a switch to immunotherapy or a change in drug dosage.

4. Results and discussion

a. Dataset Explanation

The dataset [21] was assembled to train a CycleGAN model for image-to-image translation, specifically to translate CT scans into estimated higher-detail MRI scans. It contains CT and MRI scans of brain cross-sections gathered from listed sources and split into train and test subfolders for domains A and B. The directory structure is organized so that it can be loaded by a CycleGAN implementation for image-to-image translation.

b. Performance Metrics

This section compares the proposed MMIETC method with existing methods, namely CEST+MRS [14], DECT [15], and AI-based PET [17], using the Dice similarity coefficient (DSC), Hausdorff distance (HD), sensitivity (true positive rate), and AUC-ROC (area under the receiver operating characteristic curve). DSC measures the extent of overlap between the predicted and actual tumor regions; a value close to 1 indicates good segmentation. HD measures the maximum deviation between two boundary point sets and so reflects shape precision, which matters especially in the surgical area of interest. Sensitivity, the true positive rate, indicates the model's capacity to identify all tumor-affected areas; high sensitivity reduces false negatives and supports early diagnosis. AUC-ROC summarizes classification performance across thresholds, capturing the trade-off between sensitivity and specificity when separating tumor from non-tumor regions. Together, these metrics assess segmentation accuracy, boundary precision, and diagnostic reliability, which guide treatment decisions.

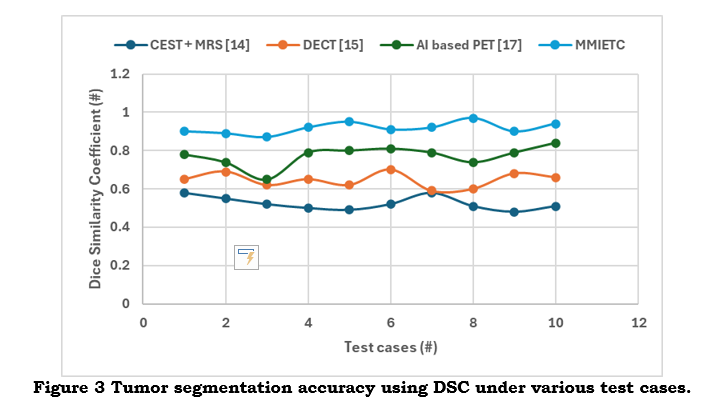

i) Dice Similarity Coefficient (DSC)

The Dice similarity coefficient quantifies the degree of overlap between the predicted tumour segmentation and the ground truth. It is significant because precise tumor segmentation is essential for diagnosis and treatment planning in medical imaging. The following equation (14) gives the necessary mathematical expression,

Where X represents the predicted tumor region and Y denotes the ground-truth tumor region. The DSC ranges from 0 to 1. Because it penalizes both over- and under-segmentation, it rewards the consistent segmentation that is essential when integrating multi-modal imaging data. A high DSC is necessary to quantify tumors accurately and to plan radiation and surgical resection. In clinical contexts, it indicates precise tumor delineation, which enhances treatment planning.
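For reference, the usual definition is DSC = 2|X ∩ Y| / (|X| + |Y|). The sketch below computes it for binary masks with NumPy; returning 1.0 when both masks are empty is a convention chosen for this example, not something stated in the text.

```python
import numpy as np

def dice_coefficient(pred, truth):
    """DSC = 2 * |X ∩ Y| / (|X| + |Y|) for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * np.logical_and(pred, truth).sum() / total

# Toy masks: two predicted pixels, two true pixels, one in common.
pred = np.array([[1, 1, 0],
                 [0, 0, 0]])
truth = np.array([[1, 0, 0],
                  [1, 0, 0]])
dsc = dice_coefficient(pred, truth)  # 2 * 1 / (2 + 2) = 0.5
```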

As shown in figure 3, a higher DSC value indicates greater overlap between the actual and predicted tumor regions and therefore more precise tumor margin delineation, a crucial step in lowering the probability of postoperative residual tumor. DSC offers a direct way to gauge segmentation accuracy, which affects the quality of diagnosis and treatment planning.

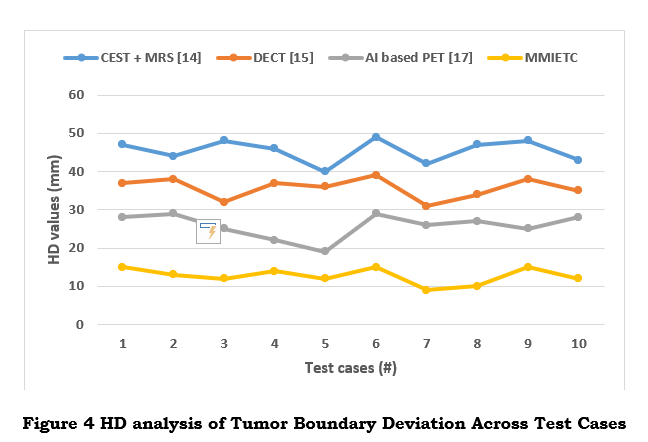

ii) Hausdorff distance (HD)

The Hausdorff distance measures the greatest separation between the actual and predicted tumor boundaries. This statistic is especially helpful for assessing how effectively the segmentation model captures the size and shape of the tumor.

In equation (15), d(x,y) denotes the Euclidean distance between the points x and y. The Hausdorff distance (HD), which is sensitive to boundary outliers, measures how well the predicted boundary matches the actual tumour shape, especially in 3D volumetric imaging. HD is essential for maintaining tumour shape accuracy, especially for resections around critical structures. Keeping it low ensures that boundary deviations do not influence clinical decisions on surgical margins. HD thus supports precise delineation of complex tumour geometries for surgical planning.
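The symmetric Hausdorff distance is commonly written as HD(X, Y) = max{ max_x min_y d(x,y), max_y min_x d(x,y) }. The sketch below assumes that definition and computes it by brute force for two small sets of boundary points; for large boundaries a spatial index or a library routine would be more efficient.

```python
import numpy as np

def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance between two point sets of shape (n, dim)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Pairwise Euclidean distances, shape (len(a), len(b)).
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # Largest nearest-neighbour distance in each direction.
    return max(d.min(axis=1).max(), d.min(axis=0).max())

# Toy boundaries: one outlying point at (0, 4) dominates the distance.
predicted = [(0, 0), (0, 1)]
actual = [(0, 0), (0, 4)]
hd = hausdorff_distance(predicted, actual)  # 3.0: (0, 4) is 3 away from its nearest point (0, 1)
```

The example shows the outlier sensitivity mentioned above: a single stray boundary point sets the value of the metric.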

As shown in figure 4, a low Hausdorff distance means that the predicted and actual tumor boundaries are almost identical, which supports complete tumor excision and accurate surgical planning. It reduces the possibility of partial resections and aids surgical precision by offering detailed insight into the accuracy of the predicted tumor border.

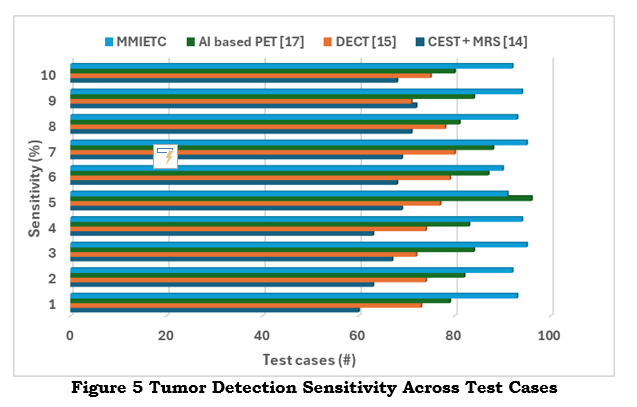

iii) Sensitivity (True Positive Rate)

Sensitivity quantifies the proposed model's ability to detect actual tumor areas. It is a crucial parameter for ensuring that tiny or early-stage tumours are not overlooked because it determines the percentage of tumour pixels accurately identified as tumours. The following equation (16) gives the necessary mathematical expression,

Where TP = True Positives (Correct tumor classifications), FN = False Negatives (missed tumor-affected areas).
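With these definitions, the standard expression is Sensitivity = TP / (TP + FN). A minimal NumPy sketch on pixel labels follows; returning NaN when the ground truth contains no tumor pixels is a choice made for this example.

```python
import numpy as np

def sensitivity(pred, truth):
    """True positive rate: TP / (TP + FN) for binary pixel labels."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.logical_and(pred, truth).sum()   # tumor pixels correctly detected
    fn = np.logical_and(~pred, truth).sum()  # tumor pixels missed
    if tp + fn == 0:
        return float("nan")  # no tumor pixels in the ground truth
    return tp / (tp + fn)

# Toy labels: three tumor pixels, two detected and one missed.
truth = np.array([1, 1, 1, 0, 0])
pred = np.array([1, 1, 0, 1, 0])
sens = sensitivity(pred, truth)  # 2 / (2 + 1)
```

The false positive in the fourth position does not affect sensitivity, which is why it is reported alongside measures such as AUC-ROC.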

High sensitivity guarantees the early and precise diagnosis of tumors, improving patient outcomes by enabling earlier interventions and more focused treatments (shown in figure 5). It is essential for early diagnosis and thorough treatment planning since it lowers false negatives and guarantees that even tiny tumor locations are recognized.

Sensitivity is essential for minimising recurrence after surgery, detecting small or early-stage tumours, and lowering the chance of missing tumours. High sensitivity also makes detecting intra-tumour heterogeneity possible, promoting more focused treatments, and enhancing early diagnosis. Additionally, it guarantees that no tumour remnants remain after surgery, speeding up clinical judgement and reducing diagnostic delays for improved patient outcomes.

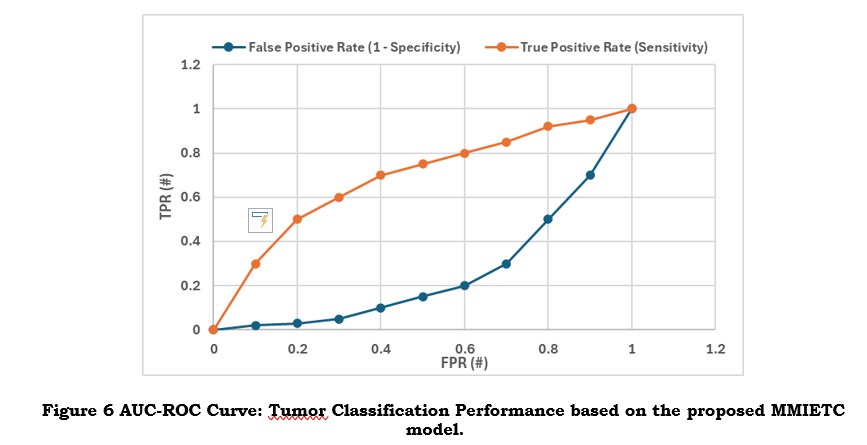

iv) AUC-ROC (Area Under the Receiver Operating Characteristic Curve)

The AUC-ROC statistic summarizes the trade-off between the true positive rate (sensitivity) and the false positive rate (1 - specificity) to assess the model's classification performance. It gives an overview of how well the model differentiates between tumor and non-tumor regions at different threshold values. The mathematical expression is shown in the following equation (17),

A high AUC-ROC value indicates that the model successfully distinguishes between tumor and non-tumor regions, resulting in more accurate tumor characterization and fewer misclassifications (as shown in figure 6). The benefit is that the system balances identifying tumors against avoiding false positives, which is crucial for making treatment decisions with confidence.

AUC-ROC balances sensitivity and specificity by assessing the model's performance across all classification thresholds. Reducing misclassifications is crucial for the reliable classification of tumour and non-tumour regions. A high AUC-ROC score increases confidence in the model's accuracy across patient groups and imaging modalities, supporting accurate diagnosis and better treatment planning for various tumour forms and grades.
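The area under the ROC curve can be computed without tracing the curve, because it equals the probability that a randomly chosen tumor pixel receives a higher score than a randomly chosen non-tumor pixel, with ties counted as one half. The sketch below uses that rank-based identity rather than integrating the true positive rate over the false positive rate; the scores and labels are toy values.

```python
import numpy as np

def auc_roc(scores, labels):
    """AUC as P(score_pos > score_neg) + 0.5 * P(score_pos == score_neg)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("both classes must be present")
    # Compare every positive score with every negative score.
    diff = pos[:, None] - neg[None, :]
    return ((diff > 0).sum() + 0.5 * (diff == 0).sum()) / (len(pos) * len(neg))

# Toy example: 3 of the 4 positive/negative pairs are ordered correctly.
labels = np.array([0, 0, 1, 1])
scores = np.array([0.1, 0.4, 0.35, 0.8])
auc = auc_roc(scores, labels)  # 0.75
```

The pairwise comparison uses memory proportional to the number of positive times negative samples, so for full-resolution pixel maps a sorted, rank-based computation or a library implementation is the more practical choice.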

5. Conclusion

The proposed MMIETC framework integrates multi-modality imaging data, namely MRI, CT, and PET scans, through state-of-the-art machine learning techniques. It leverages CNNs for feature extraction and segmentation, and Random Forests for biomarker identification and predictive analytics. The proposed approach has the potential to enhance tumour margin delineation, intra-tumour heterogeneity detection, and personalized treatment planning, with consequent improvements in diagnostic precision and patient outcomes. By fusing multi-modal inputs, the platform provides a comprehensive understanding of tumour characteristics that supports clinical decision-making through accurate segmentations and therapy recommendations. Moreover, follow-up scans, which can be processed in the same workflow, enable observation of treatment response over time and dynamic readjustment of the therapy approach. The main limitation is the reliance on high-quality multi-modal datasets, which are not available in every case, and their absence can affect performance. Future work will focus on more efficient model architectures by developing lightweight algorithms that can easily be deployed in real-world settings. Integrating explainable AI techniques is also a priority, to give better insight into the model's decision-making processes and to foster clinical trust and adoption.

References :

[1]. Overcast, W. B., Davis, K. M., Ho, C. Y., Hutchins, G. D., Green, M. A., Graner, B. D., & Veronesi, M. C. (2021). Advanced imaging techniques for neuro-oncologic tumor diagnosis, with an emphasis on PET-MRI imaging of malignant brain tumors. Current Oncology Reports, 23, 1-15.

[2]. Mathivanan, S. K., Sonaimuthu, S., Murugesan, S., Rajadurai, H., Shivahare, B. D., & Shah, M. A. (2024). Employing deep learning and transfer learning for accurate brain tumor detection. Scientific Reports, 14(1), 7232.

[3]. Green, S., Vuong, V. D., Khanna, P. C., & Crawford, J. R. (2022). Characterization of pediatric brain tumors using pre-diagnostic neuroimaging. Frontiers in Oncology, 12, 977814.

[4]. Pethuraj, M. S., Burhanuddin, M. A., & Devi, V. B. (2023). Improving accuracy of medical data handling and processing using DCAF for IoT-based healthcare scenarios. Biomedical Signal Processing and Control, 86, 105294.

[5]. Vankdothu, R., & Hameed, M. A. (2022). Brain tumor MRI images identification and classification based on the recurrent convolutional neural network. Measurement: Sensors, 24, 100412.

[6]. Bogusiewicz, J., Kupcewicz, B., Goryńska, P. Z., Jaroch, K., Goryński, K., Birski, M., ... & Bojko, B. (2022). Investigating the potential use of chemical biopsy devices to characterize brain tumor lipidomes. International Journal of Molecular Sciences, 23(7), 3518.

[7]. Khan, S. M., Nasim, F., Ahmad, J., & Masood, S. (2024). Deep Learning-Based Brain Tumor Detection. Journal of Computing & Biomedical Informatics, 7(02).

[8]. Khaliki, M. Z., & Başarslan, M. S. (2024). Brain tumor detection from images and comparison with transfer learning methods and 3-layer CNN. Scientific Reports, 14(1), 2664.

[9]. Al-Sarayrah, A. (2024). Recent advances and applications of Apriori algorithm in exploring insights from healthcare data patterns. PatternIQ Mining, 1(2), 27-39. https://doi.org/10.70023/piqm24123

[10]. Zhou, L., Wang, M., & Zhou, N. (2024). Distributed federated learning-based deep learning model for privacy MRI brain tumor detection. arXiv preprint arXiv:2404.10026.

[11]. Smits, M. (2021). MRI biomarkers in neuro-oncology. Nature Reviews Neurology, 17(8), 486-500.

[12]. Othman, G., & Zeebaree, D. Q. (2020). The applications of discrete wavelet transform in image processing: A review. Journal of Soft Computing and Data Mining, 1(2), 31-43.

[13]. Lee, R. Y., Wu, Y., Goh, D., Tan, V., Ng, C. W., Lim, J. C. T., ... & Yeong, J. P. S. (2023). Application of artificial intelligence to in vitro tumor modeling and characterization of the tumor microenvironment. Advanced Healthcare Materials, 12(14), 2202457.

[14]. Hangel, G., Schmitz‐Abecassis, B., Sollmann, N., Pinto, J., Arzanforoosh, F., Barkhof, F., ... & Emblem, K. E. (2023). Advanced MR techniques for preoperative glioma characterization: part 2. Journal of Magnetic Resonance Imaging, 57(6), 1676-1695.

[15]. Foti, G., Ascenti, G., Agostini, A., Longo, C., Lombardo, F., Inno, A., ... & Gori, S. (2024). Dual-Energy CT in Oncologic Imaging. Tomography, 10(3), 299-319.

[16]. Hirschler, L., Sollmann, N., Schmitz‐Abecassis, B., Pinto, J., Arzanforoosh, F., Barkhof, F., ... & Hangel, G. (2023). Advanced MR techniques for preoperative glioma characterization: Part 1. Journal of Magnetic Resonance Imaging, 57(6), 1655-1675.

[17]. Eisazadeh, R., Shahbazi-Akbari, M., Mirshahvalad, S. A., Pirich, C., & Beheshti, M. (2024, February). Application of Artificial Intelligence in Oncologic Molecular PET-Imaging: A Narrative Review on Beyond [18F] F-FDG Tracers Part II.[18F] F-FLT,[18F] F-FET,[11C] C-MET and Other Less-Commonly Used Radiotracers. In Seminars in Nuclear Medicine. WB Saunders.

[18]. Jadoon, E. K., Khan, F. G., Shah, S., Khan, A., & Elaffendi, M. (2023). Deep learning-based multi-modal ensemble classification approach for human breast cancer prognosis. IEEE Access.

[19]. Waqas, A., Tripathi, A., Ramachandran, R. P., Stewart, P. A., & Rasool, G. (2024). Multimodal data integration for oncology in the era of deep neural networks: a review. Frontiers in Artificial Intelligence, 7, 1408843.

[20]. Khalighi, S., Reddy, K., Midya, A., Pandav, K. B., Madabhushi, A., & Abedalthagafi, M. (2024). Artificial intelligence in neuro-oncology: advances and challenges in brain tumor diagnosis, prognosis, and precision treatment. NPJ Precision Oncology, 8(1), 80.

[21]. https://www.kaggle.com/datasets/darren2020/ct-to-mri-cgan