OPTIMIZING ACUPOINT LOCALIZATION LEVERAGING DATA AUGMENTATION IN IMAGE ANALYSIS

Authors :

Hafiz Ismail and Nurul Azman

Address :

Postdoctoral Fellow, Department of Robotics, Monash University Malaysia, Malaysia

Research Scientist, Department of Artificial Intelligence, Universiti Putra Malaysia, Malaysia

Abstract :

Acupuncture, a vital component of traditional Chinese medicine that has been practiced in China for over two thousand years, has become more widely acknowledged as a supplementary intervention in recent years. Precise acupoint localization is critical for effective acupuncture and hand reflexology treatment. However, accurate localization of acupoints in hand images remains challenging because of variations in skin texture, hand structure, lighting conditions, and image quality. Current techniques lose accuracy on complex and noisy datasets, which limits the dependability of therapeutic acupoint identification. This paper proposes ALDAU-Net, an improved framework for Acupoint Localization (AL) in hand images that combines a U-shaped Network (U-Net) for pixel-level feature extraction with Random Forest (RF) classification. Data augmentation (DA) techniques, such as geometric transformations and intensity shifts, are applied to create a more varied and robust training dataset that improves the model's generalization across different hand images. After segmentation and feature extraction with U-Net, an RF classifier labels each segmented region as an acupoint or a non-acupoint region. RF outperforms many other classifiers on noisy data and provides robust nonlinear classification based on the extracted features. The experimental results show that, in difficult cases with varying hand orientation and lighting conditions, the proposed U-Net + RF combination improves localization accuracy by 30% compared to traditional methods. DA further improves the model's generalisation capability, making the framework effective across a wide range of hand images.

Keywords :

Acupoint Localization, Data augmentation, U-Net, Random Forest, Feature Extraction, Hand Image Analysis.

1. Introduction

Chinese medical theory defines acupuncture points as special points on the body that can be stimulated to regulate the body's general balance and help relieve pain and inflammation caused by overexertion of muscles and joints [1]. Acupoint localization is an important part of acupuncture and tuina therapeutics, and the accuracy of the localisation directly affects the resulting therapeutic efficacy [2]. In general, acupoint detection refers to finding the actual positions of specific acupoints on human skin [3]. Using text descriptions and diagrams, people with no professional training in acupuncture can locate acupoints roughly, but errors are inevitable and accurate localization is not possible [4]. AI-assisted acupuncture is an emerging field that aims to improve therapeutic effects by making acupuncture treatments more precise and effective [5]. Acupuncture point recognition is currently addressed with supervised deep learning, which offers adaptability and flexibility, and the development and use of AI-based solutions in healthcare systems is likely to grow [6]. Conventional affine and elastic transformations, such as mirroring or rotating the original image, zooming in or out, and altering the colour palette, have so far been the most common and effective approach to data augmentation [7].

Data augmentation increases the sufficiency and diversity of training data by generating artificial samples. The augmented data can be viewed as being drawn from a distribution close to the real one [8]. The U-Net architecture is a type of neural network whose main application is image segmentation. It consists of two main pathways. The first, called the contracting path, encoder path, or analysis path, functions similarly to a standard convolutional network and provides classification information. It is followed by an expansion path, also called the decoder or synthesis path, which combines up-convolutions with features concatenated from the contracting path [9]. The auto-encoder-like nature of U-Net has allowed its structure to be adapted to other major applications, e.g., image synthesis, image denoising, image reconstruction, and image super-resolution [10]. The Random Forest algorithm achieves high accuracy because it is an ensemble algorithm. The performance of each method has been tested against standard criteria: precision, specificity, accuracy, and area under the receiver operating characteristic curve (AUC-ROC) [11].

Integrating U-Net for feature extraction with RF for classification is a novel approach to acupoint localization that combines the strengths of deep learning and traditional machine learning. This hybrid approach leverages U-Net's feature extraction capabilities while retaining the interpretability and robustness of RF. Our experimental results demonstrate the effectiveness of the ALDAU-Net framework through a clear improvement in localization accuracy over conventional methods. Accuracy increased by 30% in challenging cases with considerable variation in hand orientation and lighting conditions. This improvement is attributed to the combination of U-Net's fine-grained segmentation and Random Forest's ability to handle a complex feature space.

The primary contributions of this study are as follows.

This paper introduces the ALDAU-Net framework, a new approach to acupoint detection from hand images that integrates U-Net for pixel-level feature extraction with Random Forest classification. The model generalizes better when trained with augmentation techniques such as geometric transformations and intensity shifts, which increases robustness across different hand orientations and lighting conditions. It achieves more than a 30% improvement in accuracy compared to traditional methods, especially on noisy and challenging datasets. Moreover, because it can handle variations in image quality, it is well suited to clinical use in acupuncture and hand reflexology treatments.

The remainder of the paper is structured as follows: Section 2 reviews related work. Section 3 presents the proposed methodology. Section 4 compares the outcomes of the proposed methodology with those of more conventional approaches. Section 5 concludes the paper.

2. Related work

Lu P. H. et al. [12] applied the Apriori algorithm to identify efficacious acupoint combinations for acupuncture treatment of uremic pruritus (UP). This helped to address the lack of established criteria for selecting acupoints, which matters because UP significantly impairs patients' quality of life. A total of 71 association rules were obtained with high lift values, showing strong co-occurrence among acupoint combinations. The results also revealed consistently effective combinations, providing a basis for standardised acupuncture practices in UP management.

Masood D. & Qi, J. [13] proposed a methodology for acupoint localisation in three dimensions using colour and depth information from a Kinect V1 device. It addressed the inaccuracies of existing 2D methods and used background extraction, training of an RGB-CNN and a depth-CNN, and feature extraction techniques. The method yielded a high validation accuracy of 98.70% and an average localisation error below 0.09, ensuring high precision in detecting acupoints. A universal dataset covering diverse health conditions is planned.

Yang J. B. et al. [14] reviewed acupuncture as an alternative therapy for the prevention and treatment of postoperative delirium (POD) in elderly patients. The review discussed how POD is a significant problem that involves not only cognitive dysfunction but also increased health expenditure due to the clinical burden on healthcare services, and it covered clinical and basic animal studies on the effects of acupuncture. The results showed that acupuncture may improve POD by reducing pain and mitigating neuroinflammation; however, the current studies have several methodological flaws. More rigorously designed clinical trials are needed to establish the efficacy and clarify the underlying mechanisms.

Zhao P. et al. [15] proposed YoloAcuPatient, a deep learning-based method for patient in-position detection during automated acupuncture treatment. It partly addresses the shortage of acupuncturists and the surging demand for acupuncture, especially among elderly people. Based on the YOLO X model, it uses a CSPDarkNet backbone, a feature pyramid network, and a new alpha-CIoU loss function. YoloAcuPatient achieved the best mAP and F1-score on the custom dataset and performed better than competing cutting-edge approaches, proving efficient for positioning patients in acupuncture automation.

Zhang, W. [16] proposed a Traditional Chinese Medicine (TCM) database for acupoint-based diagnosis and treatment. It gives users convenient access to information on 311 acupoints and their clinical applications. The project aimed to resolve the fragmentation of knowledge in TCM and to create an environment in which better acupoint-based diagnoses and treatments can be offered. The structured database enables practitioners to locate the information they need to manage patient care and treatment outcomes effectively in traditional Chinese medicine.

Hwang, Y. C. et al. [17] provided a method for analysing acupoint indications using Bayes factor correction, which enhances the specificity of disease-targeted acupoint selection. The conventionally used reverse inference does not consider prior probabilities of diseases. The method yielded the detection of patterns in acupoint selection based on 30 diseases and the identification of consistently prescribed acupoints that give substantial evidence for their therapeutic efficacy in acupuncture treatments.

Li, Y. et al. [18] investigated various artificial intelligence models for extracting spatial relationships of acupuncture points from medical texts, a crucial task because the precise location of a point directly affects treatment effectiveness. The researchers compared GPT-3.5, GPT-4, LSTM, and BioBERT models, paying particular attention to differences between their pre-trained and fine-tuned versions. Fine-tuned GPT-3.5 performed best, with a 92% accuracy rate in understanding complex spatial relationships; it might therefore find its way into standardizing acupuncture training and practice.

Malekroodi, H. S. et al. [19] developed an intelligent computer vision system with the help of the MediaPipe framework to automatically identify and locate acupuncture points on the face and hand. Such a method helps overcome the obstacles practitioners face in the precise location of acupoints due to varying anatomies among patients, which is time- and expertise-consuming. It successfully demonstrated real-time identification of over 40 acupoints on various hand postures; the system's accuracy was validated against expert-labeled data for hand acupoints, and intuitive evaluation was done for facial points by comparison with standard references.

3. Proposed Methodology

a) Dataset Description

The dataset [20] supports both 3D and 2D hand pose estimation and hand area localization. It is made up of multiple sequences, and each sequence is a group of frames. A frame collects the various types of ground truth data for a given time instant. The ground truth information is as follows:

Lastly, every sequence was recorded under diverse circumstances to guarantee a high level of variability. Aspects such as subject, time of day, and speed of motion were varied to achieve this variability.

b) Holistic Workflow for the proposed ALDAU-Net Methodology

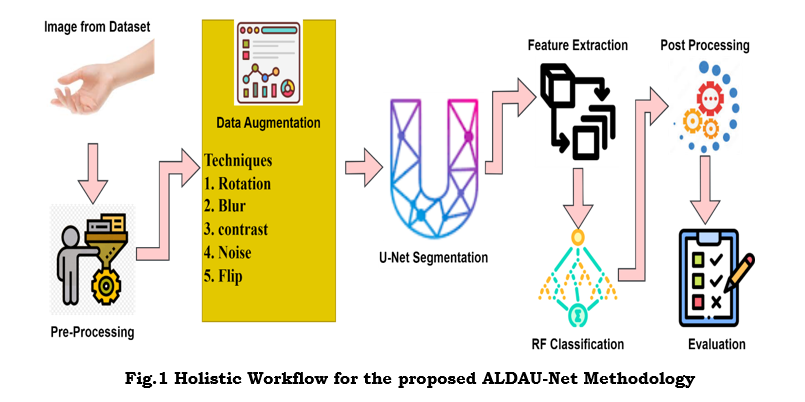

This workflow takes as input hand images with annotated acupoints, which serve as the ground truth for model training. Data augmentation increases the model's robustness by generating varied image samples through transformations such as geometric changes, intensity shifts, and noise addition. A U-Net segmentation model then performs pixel-level segmentation on these images to detect possible acupoint regions. From the segmented regions, shape, texture, and spatial attributes are extracted to provide detailed information for classification. These features are passed to a Random Forest classifier, which categorises the segmented regions as acupoints or non-acupoints based on the learned patterns. In the post-processing phase, refinement techniques are applied to enhance the accuracy of the localized acupoint regions. The final output is an acupoint map, which is evaluated with performance metrics for precision and reliability. The following subsections explain the step-by-step process of the ALDAU-Net methodology, as illustrated in Figure 1.

i) Image Acquisition

The process starts by acquiring hand images from a dataset or by capturing them directly with an imaging device, such as a camera, infrared sensor, or depth sensor. The original hand images vary in acquisition conditions, orientation, and illumination, among other factors, which introduces processing challenges.

ii) Image Pre-Processing

Preprocessing transforms the raw hand images into a consistent and usable format for further processing. It standardizes all images in size, orientation, and lighting so that meaningful features can be extracted from them. Resizing ensures that all hand images have the same dimensions. Orientation normalization standardizes the hand's pose by removing any rotational or tilt component. Illumination normalization reduces the impact of varying lighting conditions and increases contrast and visibility. Skin segmentation separates the hand from the background so that only the relevant region is processed. Together, these preprocessing steps minimize the variability in the images so that more accurate and reliable analyses can be performed.
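As an illustration of two of these steps, the following minimal sketch resizes an image by nearest-neighbour sampling and rescales its intensities to [0, 1]. The function names and the choice of nearest-neighbour and min-max scaling are assumptions made for the example; the paper does not specify which resizing or illumination normalization method is used.

```python
import numpy as np

def resize_nearest(img, out_h, out_w):
    """Resize an H x W (or H x W x C) image by nearest-neighbour sampling."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h   # source row for each output row
    cols = np.arange(out_w) * w // out_w   # source column for each output column
    return img[rows[:, None], cols]

def normalize_intensity(img):
    """Min-max scale intensities to [0, 1]; a constant image maps to zeros."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)

image = np.arange(16, dtype=np.uint8).reshape(4, 4)   # toy stand-in for a hand image
small = resize_nearest(image, 2, 2)
scaled = normalize_intensity(image)
```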

iii) Data Augmentation

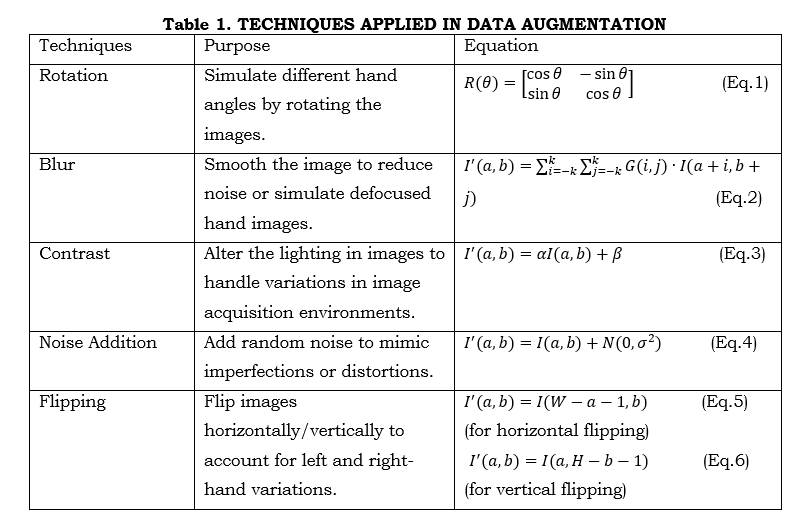

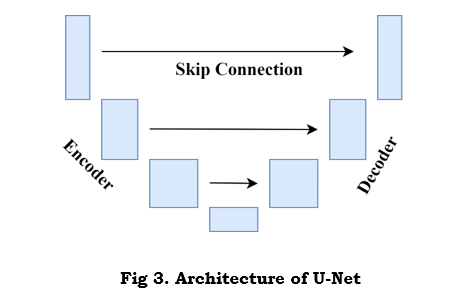

Data augmentation is one of the most critical steps, since it increases the robustness and diversity of the training dataset and enhances the model's generalization across hand types, sizes, orientations, and environmental conditions. Applying a variety of augmentation techniques makes the data more representative of real conditions and therefore reduces overfitting. The main goal of data augmentation is to simulate differences in the input images without collecting further real-world data. The model then has more opportunities to learn general features, which can improve its performance under different conditions: hand size, angle, illumination, and noise level. Table 1 shows the techniques involved in the data augmentation. Rotation is performed using Equation 1. The original image is blurred by Equation 2, and the image's lighting is adjusted using Equation 3. Noise is added to the image by Equation 4. Finally, the image can be flipped horizontally by Equation 5 or vertically by Equation 6.

where I'(a,b) is the output image at each stage, I(a,b) is the original image, and a, b are the pixel coordinates. sin θ and cos θ are the trigonometric functions used to calculate the new position of a pixel after rotation by angle θ. α controls the contrast of the image: a value of α > 1 increases the contrast, while 0 < α < 1 reduces it. β shifts the intensity values uniformly across the image: increasing β brightens the image, while decreasing it (e.g., a negative β) darkens it. N(0, σ²) represents a Gaussian (normal) distribution with mean 0 and variance σ², where σ controls the intensity of the noise. W is the width of the image and H is its height. Figure 2 shows the outputs of the various data augmentation techniques.

Figure 2 shows that data augmentation enhances robustness and generalization by simulating real variations in illumination, noise, and hand orientation. These variations will make the model perform well under different conditions, ensuring more accurate and reliable acupoint localization.
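The augmentation operations described in this subsection can be sketched in NumPy as follows. This is a minimal illustration built from the symbol definitions (θ, α, β, σ, W, H), not the authors' implementation: the function names, default parameter values, clipping to the 8-bit range, and nearest-neighbour sampling in the rotation are all assumptions, and blurring is omitted because the text does not define the blur kernel.

```python
import numpy as np

def adjust_contrast_brightness(img, alpha=1.2, beta=10.0):
    """I'(a,b) = alpha * I(a,b) + beta, clipped to the 8-bit range."""
    return np.clip(alpha * img.astype(np.float64) + beta, 0, 255)

def add_gaussian_noise(img, sigma=5.0, seed=0):
    """I'(a,b) = I(a,b) + n, with n drawn from N(0, sigma^2)."""
    rng = np.random.default_rng(seed)
    noisy = img.astype(np.float64) + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(noisy, 0, 255)

def flip_horizontal(img):
    """Mirror left-right: column a is read from column W - 1 - a."""
    return img[:, ::-1]

def flip_vertical(img):
    """Mirror top-bottom: row b is read from row H - 1 - b."""
    return img[::-1, :]

def rotate(img, theta_deg):
    """Rotate about the image centre by inverse mapping with nearest-neighbour
    sampling; output pixels whose source falls outside the image are set to 0."""
    h, w = img.shape[:2]
    theta = np.deg2rad(theta_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.indices((h, w))
    x0, y0 = xs - cx, ys - cy
    # For every output pixel, compute the source coordinates it is read from.
    src_x = np.cos(theta) * x0 + np.sin(theta) * y0 + cx
    src_y = -np.sin(theta) * x0 + np.cos(theta) * y0 + cy
    sx, sy = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros_like(img)
    out[valid] = img[sy[valid], sx[valid]]
    return out

image = np.arange(16, dtype=np.uint8).reshape(4, 4)   # toy stand-in for a hand image
augmented = [
    rotate(image, 90),
    adjust_contrast_brightness(image, alpha=1.5, beta=-5.0),
    add_gaussian_noise(image, sigma=3.0),
    flip_horizontal(image),
    flip_vertical(image),
]
```

In a training pipeline, each transformation would typically be applied with randomly sampled parameters, and geometric transformations would be applied to the acupoint annotations as well so that labels stay aligned with the image.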

iv) U-Net Architecture for Feature Extraction (Segmentation)

The input to the U-Net model is the set of augmented hand images. These images show the hand in various poses, illuminations, and orientations; hence, they are preprocessed (cropped, resized, and normalized) before being passed into the network.

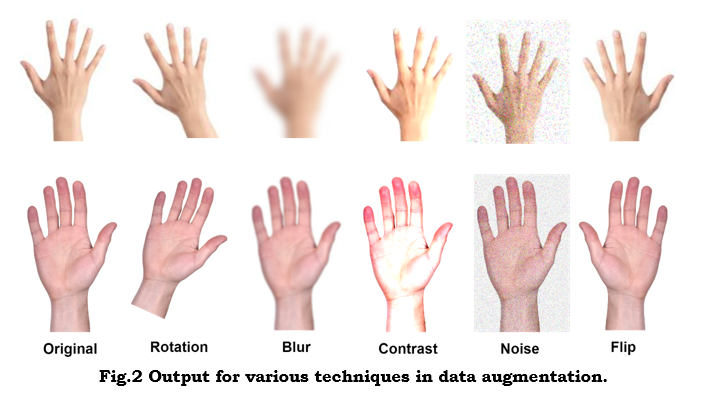

The U-Net architecture comprises mainly two halves. Figure 3 shows the U-Net architecture.

1. Encoder (Contracting Path): The encoder downsamples the input image to acquire high-level features at reduced spatial resolution. It extracts features hierarchically through progressive down-sampling of the image via convolutional and pooling layers.

Convolutional Layers: Feature maps are generated using a convolution operation to capture edges, textures, and high-level structures. A convolution operation is given in equation 7 as follows:

where f(a,b) is the feature map value at position (a,b), W(i,j) represents the convolution filter weights, I(a+i,b+j) is the input image pixel or the output from the previous layer, bt is the bias term, and k is the size of the filter kernel.
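The convolution described by these symbols can be illustrated with a direct, unoptimized implementation. The sketch below assumes that i and j run from 0 to k-1 and that no padding is used ("valid" convolution); the text only states that k is the kernel size, so the index range and the function name are assumptions.

```python
import numpy as np

def conv2d_valid(image, weights, bias=0.0):
    """f(a,b) = sum_i sum_j W(i,j) * I(a+i, b+j) + bias, for i, j in 0..k-1."""
    k = weights.shape[0]
    h, w = image.shape
    out = np.empty((h - k + 1, w - k + 1), dtype=np.float64)
    for a in range(h - k + 1):
        for b in range(w - k + 1):
            # Element-wise product of the kernel with the k x k window at (a, b).
            out[a, b] = np.sum(weights * image[a:a + k, b:b + k]) + bias
    return out

image = np.arange(16, dtype=np.float64).reshape(4, 4)
feature_map = conv2d_valid(image, np.ones((2, 2)), bias=1.0)
```

Deep learning frameworks compute the same quantity with many filters in parallel and learn W and the bias during training.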

Pooling Layers: The max-pooling layers reduce spatial dimensions, retaining the most important information. At this step, context is captured by reducing an image's resolution.
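A minimal sketch of max pooling follows, assuming non-overlapping 2 x 2 windows. The window size is an assumption, since the text does not state it, and the function name is illustrative.

```python
import numpy as np

def max_pool2d(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    h, w = feature_map.shape
    h2, w2 = h // size, w // size
    trimmed = feature_map[:h2 * size, :w2 * size]   # drop rows/columns that do not fill a window
    return trimmed.reshape(h2, size, w2, size).max(axis=(1, 3))

pooled = max_pool2d(np.arange(16).reshape(4, 4))
```

Each pooling step halves the height and width, which is how the contracting path trades spatial resolution for context.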

2. Decoder (Expanding Path): The decoder can then use this information to enable pixel-wise classification by upsampling the feature maps to the original input size. It works toward restoring the spatial resolution to localize objects, like acupoints, in the input image.

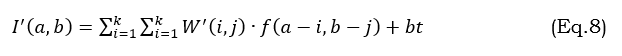

Up-sampling: Feature maps are progressively up-sampled by transposed convolution or bilinear interpolation to increase the spatial resolution. The upsampling process can be given as equation 8.

where W'(i,j) is the filter for up-sampling and f(a-i,b-j) is the down-sampled feature map.
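Up-sampling by transposed convolution can be sketched as follows: every input value is multiplied by the filter and accumulated into a larger output grid. The stride of 2 and the kernel of ones used in the example are illustrative assumptions, not values given in the paper.

```python
import numpy as np

def transposed_conv2d(feature_map, weights, stride=2):
    """Scatter each input value, scaled by the k x k filter, into the output grid."""
    h, w = feature_map.shape
    k = weights.shape[0]
    out = np.zeros(((h - 1) * stride + k, (w - 1) * stride + k))
    for a in range(h):
        for b in range(w):
            out[a * stride:a * stride + k, b * stride:b * stride + k] += feature_map[a, b] * weights
    return out

coarse = np.array([[1.0, 2.0], [3.0, 4.0]])
upsampled = transposed_conv2d(coarse, np.ones((2, 2)), stride=2)
```

With a 2 x 2 kernel of ones and stride 2, the operation reduces to nearest-neighbour up-sampling; a learned filter lets the network choose how the values are spread.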

Convolutional Layers: After up-sampling, a convolutional layer is applied again to produce accurate feature maps of high spatial resolution.

Skip Connections: Skip connections between the encoder and decoder pass fine-grained, high-resolution information from earlier layers to the decoder, helping it recover the spatial precision lost during down-sampling. A skip connection concatenates the encoder's feature maps with the decoder's feature maps. This ensures that both high-level and low-level features are considered during the reconstruction of the segmentation map.
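The concatenation performed by a skip connection can be illustrated with arrays in a channels-last layout (height x width x channels). The layout, the channel counts, and the function name are assumptions made for the example.

```python
import numpy as np

def skip_concat(encoder_features, decoder_features):
    """Concatenate encoder and decoder feature maps along the channel axis."""
    if encoder_features.shape[:2] != decoder_features.shape[:2]:
        raise ValueError("spatial sizes must match before concatenation")
    return np.concatenate([encoder_features, decoder_features], axis=-1)

encoder_out = np.zeros((8, 8, 16))   # high-resolution features saved from the encoder
decoder_out = np.ones((8, 8, 32))    # up-sampled features at the same resolution
merged = skip_concat(encoder_out, decoder_out)
```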

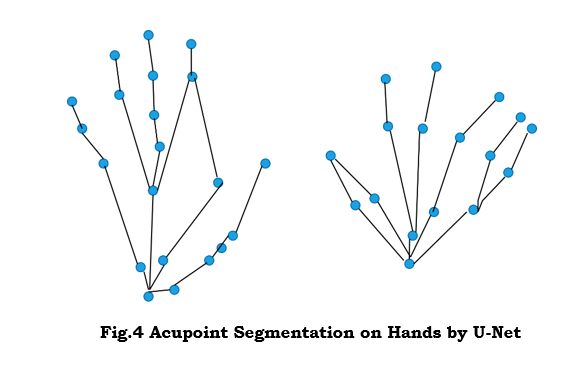

The U-Net outputs a segmentation map, essentially a pixel-level classification map where each pixel is classified into either ROI or background. In acupoint detection, the segmented regions correspond to possible acupoint regions. Figure 4 shows the segmentation of acupoint by U-Net.

Figure 4 shows the U-Net segmenting acupoint areas from hand images. The design incorporates a contracting path for extracting features and an expanding path for performing the final, accurate pixel-level categorisation. This helps localise acupoints accurately even when hand orientation or lighting conditions vary, which is essential for reliable acupuncture therapy.

v) Feature Extraction from Segmented Regions

After the U-Net segments potential acupoint regions, features are extracted from these regions so that they can be classified further. The extracted features include shape descriptors, such as the size, contour, and aspect ratio of the regions, which describe their geometry. Skin texture around acupoints is represented using Local Binary Patterns (LBP) or Haralick texture features. In addition, coordinates or spatial relations among the segmented regions can be extracted as spatial features. Combined, these features form the input to the Random Forest classifier, which classifies each region as an acupoint or a non-acupoint.
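A minimal sketch of such descriptors is given below, computed from a binary mask of one segmented region and a grayscale patch. The specific features (area, bounding-box aspect ratio, extent, centroid) and the basic 8-neighbour LBP variant are assumptions chosen for illustration; the paper does not list the exact descriptors or LBP parameters.

```python
import numpy as np

def region_features(mask):
    """Shape and spatial descriptors of a single binary region."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask contains no foreground pixels")
    height = int(ys.max() - ys.min() + 1)
    width = int(xs.max() - xs.min() + 1)
    area = int(ys.size)
    return {
        "area": area,
        "aspect_ratio": width / height,                   # bounding-box width / height
        "extent": area / (width * height),                # fraction of the bounding box filled
        "centroid": (float(ys.mean()), float(xs.mean())), # (row, column)
    }

def lbp_histogram(gray):
    """Normalized histogram of basic 8-neighbour LBP codes (image at least 3 x 3)."""
    gray = np.asarray(gray, dtype=np.float64)
    h, w = gray.shape
    centre = gray[1:-1, 1:-1]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(centre.shape, dtype=np.uint8)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes += ((neighbour >= centre) * (1 << bit)).astype(np.uint8)
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

mask = np.zeros((6, 8), dtype=bool)
mask[1:3, 2:6] = True
shape_features = region_features(mask)
texture_features = lbp_histogram(np.full((5, 5), 7.0))
```

The shape values and the texture histogram would then be concatenated into a single feature vector per region.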

vi) Random Forest Classification

The RF model is trained on a labelled dataset in which every segmented region is labelled as an acupoint or a non-acupoint. During training, RF builds an ensemble of decision trees. Each tree is trained on a bootstrap sample, that is, a random subset of the dataset drawn with replacement. To introduce diversity among the trees, each internal node considers only a random subset of the features. The tree then splits the data at each node on the most informative of these features so as to best separate acupoint and non-acupoint regions. This is done for each tree in the forest; hence, RF learns many patterns and relations between feature vectors and their corresponding class labels (acupoint vs. non-acupoint).
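The training procedure can be sketched with scikit-learn's `RandomForestClassifier`. The feature vectors below are synthetic and purely hypothetical (area, aspect ratio, mean texture value); the number of trees and the `max_features="sqrt"` setting are assumptions, since the paper does not report its hyperparameters.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)

# Hypothetical region features: [area, aspect_ratio, mean_texture].
acupoint = rng.normal(loc=[50.0, 1.0, 0.6], scale=[5.0, 0.1, 0.05], size=(100, 3))
non_acupoint = rng.normal(loc=[200.0, 2.5, 0.3], scale=[40.0, 0.5, 0.05], size=(100, 3))
X = np.vstack([acupoint, non_acupoint])
y = np.array([1] * 100 + [0] * 100)   # 1 = acupoint, 0 = non-acupoint

clf = RandomForestClassifier(
    n_estimators=100,      # number of decision trees in the ensemble
    max_features="sqrt",   # random feature subset considered at each split
    bootstrap=True,        # each tree is fitted on a bootstrap sample
    random_state=0,
)
clf.fit(X, y)

new_regions = np.array([[52.0, 1.05, 0.58], [210.0, 2.4, 0.31]])
pred = clf.predict(new_regions)
```

At prediction time the class is decided by aggregating the outputs of all trees, which is what makes the ensemble less sensitive to noise than a single tree.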

vii) Post Processing

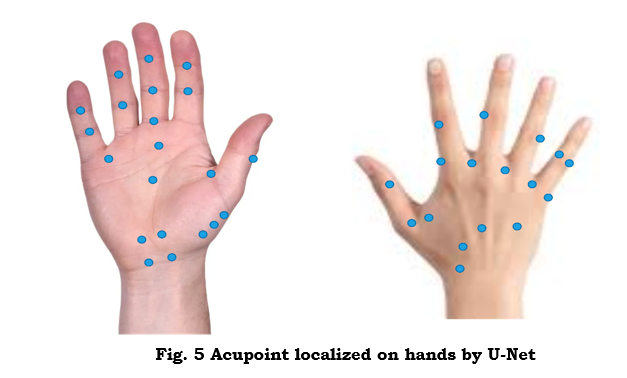

Post-processing refines the initial acupoint localization to achieve better accuracy. This step applies filtering techniques that remove falsely detected regions that do not meet geometric criteria on size or shape. For example, regions that are too small, too large, or irregularly shaped can be rejected. Smoothing techniques are then used to refine the boundaries of the detected regions so that the result is more accurate. The output is a localized acupoint map giving the location of the acupoints on the hand, which increases the precision and reliability of the system. Figure 5 shows the final output that identifies the acupoints on the hand.

Figure 5 shows the result of acupoint localization on hand images by the ALDAU-Net framework. After segmentation by U-Net and classification by Random Forest, the position of each acupoint is mapped onto the hand. The figure shows how the model improves accuracy and robustness, especially under the often-ignored variations in hand orientation, size, and lighting conditions, to support reliable acupoint identification in clinical applications. Figure 1 graphically depicts evidence of the improved accuracy by incorporating deep learning into the machine learning system.
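The size-based filtering used in the post-processing step can be sketched as follows. The example finds 4-connected regions with a breadth-first flood fill and keeps only those whose pixel count lies within a given range; the connectivity, the area thresholds, and the function name are assumptions, as the paper does not state the criteria it uses.

```python
import numpy as np
from collections import deque

def filter_regions_by_area(mask, min_area, max_area):
    """Keep 4-connected foreground regions with min_area <= pixel count <= max_area."""
    h, w = mask.shape
    visited = np.zeros((h, w), dtype=bool)
    kept = np.zeros((h, w), dtype=bool)
    for sy in range(h):
        for sx in range(w):
            if not mask[sy, sx] or visited[sy, sx]:
                continue
            # Breadth-first flood fill collects the pixels of one region.
            queue = deque([(sy, sx)])
            visited[sy, sx] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not visited[ny, nx]:
                        visited[ny, nx] = True
                        queue.append((ny, nx))
            if min_area <= len(pixels) <= max_area:
                for y, x in pixels:
                    kept[y, x] = True
    return kept

candidates = np.zeros((10, 10), dtype=bool)
candidates[0, 0] = True        # too small: likely noise
candidates[2:5, 2:5] = True    # plausible acupoint region (9 pixels)
candidates[6:10, :] = True     # too large: likely background
refined = filter_regions_by_area(candidates, min_area=4, max_area=20)
```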

4. Results and discussion

a. Performance Metrics

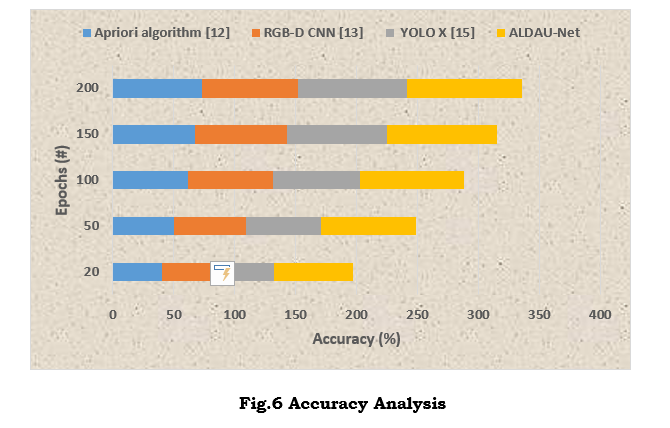

Here, standard approaches such as the Apriori algorithm [12], RGB-D CNN [13], and the YOLO X [15] are contrasted with the suggested ALDAU-Net methodology using measures such as F1-Score, Precision and Recall, Accuracy, and Localization Error.

i) Accuracy

Accuracy measures the proportion of regions (both acupoint and non-acupoint) that were correctly recognized out of all predictions. It reflects the model's ability to differentiate between acupoint and non-acupoint areas. It is computed using Equation 9.

where TP (True Positives) are the correctly identified acupoints, TN (True Negatives) refers to the correctly identified non-acupoint regions, FP (False Positives) are the non-acupoint regions incorrectly identified as acupoints. FN (False Negatives) are the missed acupoints (acupoints incorrectly classified as non-acupoint regions).

Figure 6 presents the accuracy analysis of the ALDAU-Net framework compared with different acupoint localization methods. The graph reflects the strong performance of ALDAU-Net relative to the traditional methods using the Apriori algorithm, RGB-D CNN, and YOLO X. The figure also shows that ALDAU-Net improves acupoint detection accuracy by up to 30%, especially under adverse conditions caused by noise or changes in the orientation and illumination of the hand. That is, the framework distinguishes more accurately between acupoint and non-acupoint regions, which supports its clinical applicability.

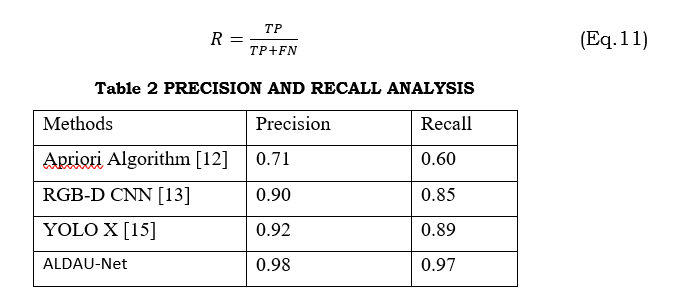

ii) Precision and Recall

Precision is the fraction of the regions detected as acupoints by the model that are actual acupoints. It is computed using Equation 10. It emphasises the model's capacity to avoid incorrect acupoint predictions, or false positives.

A low number of false positives, and hence a high precision, indicates that the model rarely labels a non-acupoint region as an acupoint.

Recall, also called sensitivity or true positive rate, is the fraction of real acupoints that the model correctly recognised. It focuses on how well the model detects all true acupoints, avoiding false negatives (missed detections). It is computed using Equation 11.

Apriori Algorithm, RGB-D CNN, YOLO X, and the suggested ALDAU-Net framework are some of the acupoint localisation approaches compared in Table 2 according to their recall and precision. It illustrates that ALDAU-Net had the best performance among all the mentioned methods, characterized by a precision of 0.98 and a recall of 0.97. This indicates its better performance in reducing the number of false positives, namely, non-acupoints that are wrongly marked as acupoints, while correctly identifying true acupoints. The table underlines that ALDAU-Net is effective in challenging conditions and, hence, more robust and reliable clinically for acupoint localization.

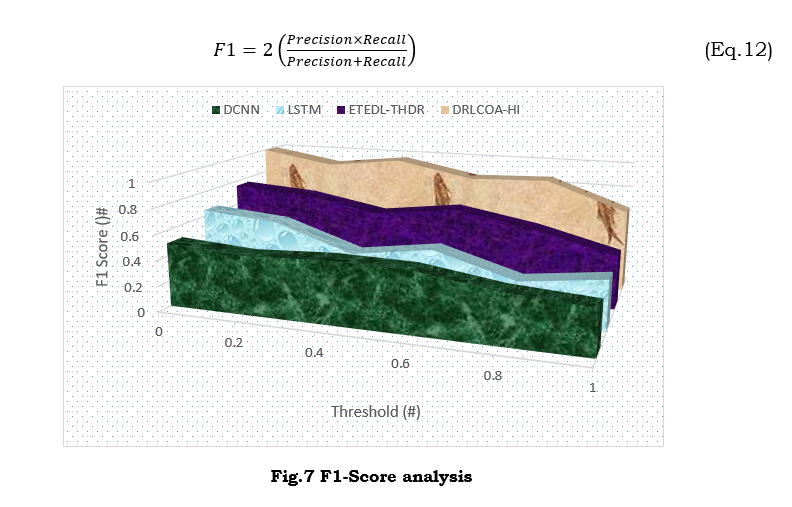

iii) F1-Score

The F1 Score is the harmonic mean of precision and recall. Combining the two provides a single statistic that gives more weight to the lower of the two scores. This property is particularly useful when the dataset is imbalanced, with far more non-acupoint areas than acupoint areas. Equation 12 is used to calculate the F1 Score.
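For reference, accuracy, precision, recall, and F1 Score can be computed from the four confusion-matrix counts using their standard definitions, as in the sketch below. The counts in the example are hypothetical and are not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard metrics from true/false positive and negative counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Harmonic mean of precision and recall; defined as 0 when both are 0.
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts for illustration only.
metrics = classification_metrics(tp=8, tn=10, fp=2, fn=0)
```

Because the harmonic mean is dominated by the smaller value, a model with high recall but low precision (or the reverse) still receives a low F1 Score.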

Figure 7 illustrates the F1-Score analysis among acupoint localization methods: Apriori Algorithm, RGB-D CNN, YOLO X, and ALDAU-Net. ALDAU-Net achieves the highest F1-Score of all the methods, and since the F1-Score integrates precision and recall, this indicates that it balances the two better than the alternatives. This performance suggests that ALDAU-Net locates acupoints more reliably, even under challenging image variations caused by noise, hand orientation, or lighting conditions. The figure underlines the robustness of ALDAU-Net in clinical settings.

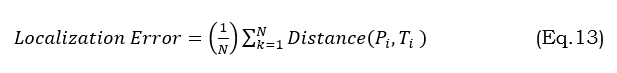

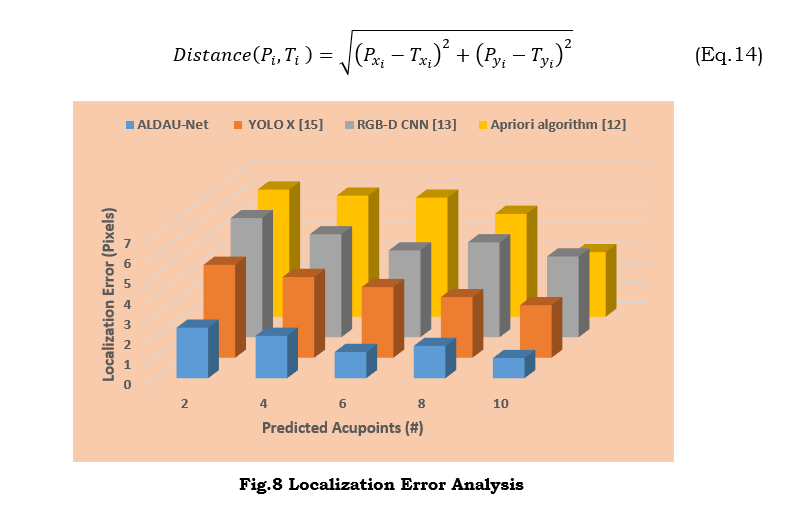

iv) Localization Error

Localization error measures the distance between the predicted acupoints and their actual ground truth positions. Acupoint localization relies heavily on spatial precision. It is obtained by Equation 13.

where N is the overall count of acupoints in the image, P_i is the predicted position of the i-th acupoint. T_i is the true position of the i-th acupoint. Distance is usually measured using the Euclidean distance, as explained in equation 14.
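Under the definitions above, the localization error can be sketched as the mean Euclidean distance between predicted and true positions. Averaging over the N acupoints is an assumption based on the role of N in the text, and the coordinates in the example are hypothetical.

```python
import numpy as np

def mean_localization_error(predicted, truth):
    """Mean Euclidean distance between predicted positions P_i and true positions T_i."""
    predicted = np.asarray(predicted, dtype=np.float64)
    truth = np.asarray(truth, dtype=np.float64)
    if predicted.shape != truth.shape:
        raise ValueError("predicted and truth must have the same shape")
    distances = np.linalg.norm(predicted - truth, axis=1)   # one distance per acupoint
    return float(distances.mean())

# Hypothetical pixel coordinates for two acupoints.
error = mean_localization_error([[0.0, 0.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]])
```

The result is expressed in the same units as the coordinates, so pixel errors need to be converted before being compared across images of different resolution.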

Figure 8 compares the localization error of the acupoint detection methods ALDAU-Net, YOLO X, RGB-D CNN, and the Apriori Algorithm. As the figure shows, ALDAU-Net has the lowest localization error, which means it is the most precise of these methods at locating the position of the acupoints. The lower error rate shows the framework's capability to locate acupoints precisely under complex conditions, including different hand orientations and lighting conditions. The figure suggests that ALDAU-Net offers higher spatial precision, which is important in clinical applications that demand precise acupoint localization.

5. Conclusion

The proposed ALDAU-Net framework enhances the precision of acupoint localization by combining U-Net for feature extraction with Random Forest for classification, attaining up to a 30% improvement over traditional methods. By applying geometric transformations and intensity shifts, the framework strengthens model generalization across various hand images. It allows reliable identification of acupoints under challenging conditions, such as different hand orientations and sizes and variations in lighting. The experimental results show that ALDAU-Net is well suited to practical acupuncture and hand reflexology, since it has the lowest localisation error and outperforms the current state-of-the-art approaches in terms of recall, precision, and F1 score. The system integrates deep learning and machine learning techniques to provide robust and dependable acupoint localization with fewer false positives, supporting correct acupoint detection even in noisy datasets. One limitation of this study is its comparatively small dataset, which could affect the model's performance when generalized to larger and more diverse populations with varying hand shapes and disease conditions. The work can be improved further by expanding the dataset with more types of hands, applying advanced augmentation techniques, and considering other classification algorithms.

References :

[1] Wang, H., Liu, L., Wang, Y., & Du, S. (2023). Hand acupuncture point localisation method based on a dual-attention mechanism and cascade network model. Biomedical Optics Express, 14(11), 5965-5978.

[2] Yuan, Z., Shao, P., Li, J., Wang, Y., Zhu, Z., Qiu, W., ... & Han, A. (2024). YOLOv8-ACU: improved YOLOv8-pose for facial acupoint detection. Frontiers in Neurorobotics, 18, 1355857.

[3] Zhang, T., Yang, H., Ge, W., & Lin, Y. (2023). An image‐based facial acupoint detection approach using high‐resolution network and attention fusion. IET Biometrics, 12(3), 146-158.

[4] Yu, Z., Zhang, S., Zhang, L., Wen, C., Yu, S., Sun, J., ... & Gan, Y. (2024). Design and Performance Evaluation of a Home-Based Automatic Acupoint Identification and Treatment System. IEEE Access, 12, 25491-25500.

[5] Bao, Y., Ding, H., Zhang, Z., Yang, K., Tran, Q., Sun, Q., & Xu, T. (2023). Intelligent acupuncture: data-driven revolution of traditional Chinese medicine. Acupuncture and Herbal Medicine, 3(4), 271-284.

[6] Al-Sarayrah, A. (2024). Recent advances and applications of Apriori algorithm in exploring insights from healthcare data patterns. PatternIQ Mining, 1(2), 27-39.

[7] Dhote, S., Baskar, S., Shakeel, P. M., & Dhote, T. (2023). Cloud computing assisted mobile healthcare systems using distributed data analytic model. IEEE Transactions on Big Data.

[8] Yang, S., Xiao, W., Zhang, M., Guo, S., Zhao, J., & Shen, F. (2022). Image data augmentation for deep learning: A survey. arXiv preprint arXiv:2204.08610.

[9] Siddique, N., Paheding, S., Elkin, C. P., & Devabhaktuni, V. (2021). U-net and its variants for medical image segmentation: A review of theory and applications. IEEE access, 9, 82031-82057.

[10] Azad, R., Aghdam, E. K., Rauland, A., Jia, Y., Avval, A. H., Bozorgpour, A., ... & Merhof, D. (2024). Medical image segmentation review: The success of u-net. IEEE Transactions on Pattern Analysis and Machine Intelligence.

[11] Shiny, K. V., Ajnabi, A. K., Kumar, A., Singh, B. K., & Gupta, A. (2024). A Machine Learning Approach for Breast Cancer Detection using Random Forest Algorithm. International Journal of Research in Engineering, Science and Management, 7(4), 14-18.

[12] Lu, P. H., Lai, C. C., Chiu, L. Y., Lin, I. H., Iou, C. C., & Lu, P. H. (2024). An Apriori algorithm-based association rule analysis to identify acupoint combinations for treating uremic pruritus. Tzu Chi Medical Journal, 36(2), 195-202.

[13] Masood, D., & Qi, J. (2022). 3D Localization of hand acupoints using hand geometry and landmark points based on RGB-D CNN fusion. Annals of Biomedical Engineering, 50(9), 1103-1115.

[14] Yang, J. B., Wang, L. F., & Cao, Y. F. (2023). Advances in the prevention and treatment of postoperative delirium by acupuncture: A review. Medicine, 102(14), e33473.

[15] Zhao, P., Pang, L., Cao, S., & Cao, Z. (2024, March). Deep learning-based patient in-position detection for acupuncture treatment. In Computational Optical Imaging and Artificial Intelligence in Biomedical Sciences (Vol. 12857, pp. 157-165). SPIE.

[16] Zhang, W. (2023). Information system of acupoint diagnosis and treatment in Traditional Chinese Medicine. Network Biology, 13(2), 53.

[17] Hwang, Y. C., Lee, I. S., Ryu, Y., Lee, Y. S., & Chae, Y. (2020). Identification of acupoint indication from reverse inference: data mining of randomized controlled clinical trials. Journal of Clinical Medicine, 9(9), 3027.

[18] Li, Y., Peng, X., Li, J., Zuo, X., Peng, S., Pei, D., ... & Hong, N. (2024). Relation extraction using large language models: a case study on acupuncture point locations. Journal of the American Medical Informatics Association, ocae233.

[19] Malekroodi, H. S., Yi, M., and Byeong-il Lee, B. (2023) A Computer Vision Approach for Identifying Acupuncture Points on the Face and Hand Using the MediaPipe Framework.

[20] Gomez-Donoso, F., Orts-Escolano, S., & Cazorla, M. (2019). Large-scale multiview 3d hand pose dataset. Image and Vision Computing, 81, 25-33.